Key Concepts

Listed below are the key concepts of WSO2 Micro Integrator.

Message entry points

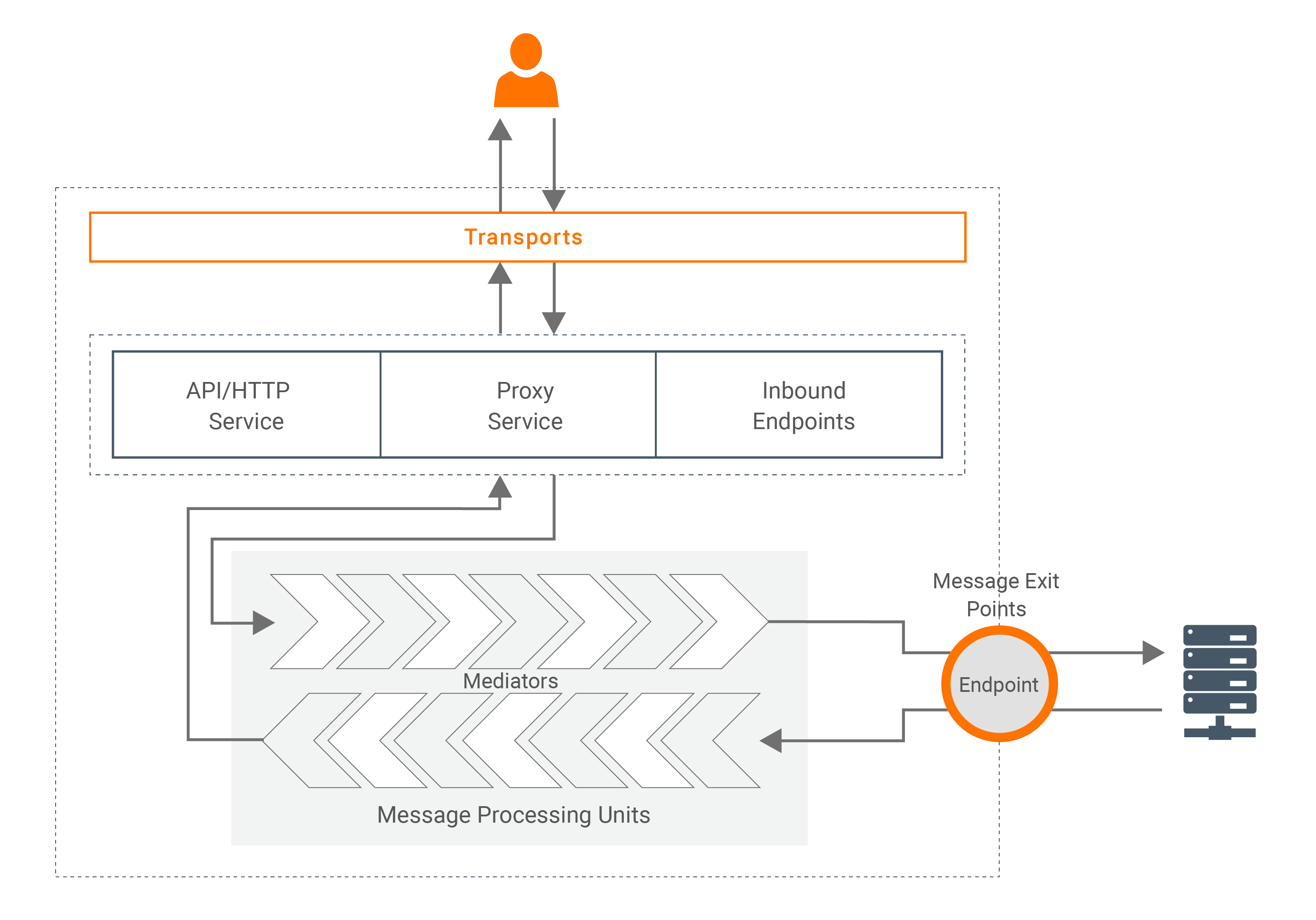

Message entry points are the entities through which a message enters the Micro Integrator mediation flow.

REST APIs

A REST API in WSO2 Micro Integrator is analogous to a web application deployed in a web container. A REST API receives messages over HTTP/S, performs the necessary transformations and processing, and then forwards the messages to a given endpoint. Each API is anchored at a user-defined URL context, much like a web application deployed in a servlet container is anchored at a fixed URL context, and an API only processes requests that fall under its URL context. An API is made up of one or more resources, which are logical components of an API that can be accessed by making a particular type of HTTP call.
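To make the context-and-resource idea concrete, here is a minimal Python sketch. It models an API as a URL context plus a table of resources keyed by HTTP method and sub-path. `RestApi`, `route()`, and the `/orders` context are hypothetical illustrations. The Micro Integrator defines APIs in its own configuration, not in Python.

```python
# Conceptual model only: RestApi and route() are hypothetical names, not part
# of WSO2 Micro Integrator, whose APIs are defined in its own configuration.
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

Handler = Callable[[str], str]


@dataclass
class RestApi:
    context: str  # user-defined URL context, e.g. "/orders"
    # Resources keyed by (HTTP method, sub-path relative to the context).
    resources: Dict[Tuple[str, str], Handler] = field(default_factory=dict)

    def owns(self, path: str) -> bool:
        # An API only processes requests that fall under its URL context.
        return path == self.context or path.startswith(self.context + "/")

    def dispatch(self, method: str, path: str, body: str) -> Optional[str]:
        # Pick the resource that matches both the HTTP method and the sub-path.
        sub_path = path[len(self.context):] or "/"
        handler = self.resources.get((method, sub_path))
        return handler(body) if handler else None


def route(apis: List[RestApi], method: str, path: str, body: str = "") -> Optional[str]:
    for api in apis:
        if api.owns(path):
            return api.dispatch(method, path, body)
    return None  # no API is anchored at this context


orders = RestApi("/orders")
orders.resources[("GET", "/")] = lambda body: "list orders"
orders.resources[("POST", "/")] = lambda body: "created " + body
print(route([orders], "GET", "/orders"))     # list orders
print(route([orders], "GET", "/customers"))  # None
```

A request whose path falls outside every context, or whose method has no matching resource, is simply not handled. This mirrors the rule that an API only processes requests under its own context.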

Proxy Services

A proxy service is a virtual service that receives messages and optionally processes them before forwarding them to a service at a given endpoint. This approach allows you to perform the necessary message transformations and introduce additional functionality to your services without changing the actual services. Unlike REST APIs, proxy services are not limited to HTTP/S; they support well-known protocols including HTTP/S, JMS, FTP, FIX, and HL7.
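The following Python sketch illustrates the "virtual service in front of an unchanged service" idea. The proxy optionally transforms a request and then forwards it to the back end, while the back end stays untouched. `legacy_backend`, `make_proxy`, and the field names are hypothetical. This is not how a proxy service is actually configured in the Micro Integrator.

```python
# Conceptual sketch of the proxy-service idea: a virtual service that sits in
# front of an unchanged back-end service. All names here are hypothetical.
from typing import Callable, Dict, Optional

Message = Dict[str, str]
Service = Callable[[Message], Message]


def legacy_backend(msg: Message) -> Message:
    # The actual service; it is never modified.
    return {"status": "ok", "customer": msg["customerId"]}


def make_proxy(target: Service,
               in_transform: Optional[Callable[[Message], Message]] = None) -> Service:
    def proxy(msg: Message) -> Message:
        if in_transform is not None:  # processing is optional
            msg = in_transform(msg)
        return target(msg)  # forward to the back-end service
    return proxy


# New clients send "id"; the proxy maps it to the field the back end expects.
proxy = make_proxy(legacy_backend, lambda m: {"customerId": m["id"]})
print(proxy({"id": "C-42"}))  # {'status': 'ok', 'customer': 'C-42'}
```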

Inbound Endpoints

For proxy services and REST APIs, part of the configuration is global to a particular Micro Integrator instance. For example, the HTTP port must be the same for all REST APIs. Inbound endpoints do not depend on such global configurations; each inbound endpoint is configured independently (for example, an HTTP inbound endpoint can listen on its own port). This gives inbound endpoints extra flexibility in configuration compared to the other two message entry points.

Message processing units

Mediators

Mediators are individual processing units that perform a specific function on messages that pass through the Micro Integrator. The mediator takes the message received by the message entry point (Proxy service, REST API, or Inbound Endpoint), carries out some predefined actions on it (such as transforming, enriching, filtering), and outputs the modified message.

Mediation Sequences

A mediation sequence is a set of mediators organized into a logical flow, allowing you to implement the pipes-and-filters pattern. The mediators in the sequence perform the necessary message processing and route the message to the required destination.
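The Python sketch below shows the pipes-and-filters idea behind mediators and sequences. Each mediator is a function that takes a message and returns a processed message, or `None` to stop the flow. The sequence feeds each mediator's output into the next one. The mediators (`enrich`, `filter_small_orders`, `transform`) are hypothetical stand-ins, not built-in WSO2 mediators.

```python
# Conceptual sketch of a mediation sequence as a pipes-and-filters pipeline.
# The mediator functions below are hypothetical stand-ins, not WSO2 mediators.
from typing import Any, Callable, Dict, List, Optional

Message = Dict[str, Any]
Mediator = Callable[[Message], Optional[Message]]  # None means "drop"


def enrich(msg: Message) -> Message:
    # Adds information to the message (enrichment).
    return {**msg, "currency": "USD"}


def filter_small_orders(msg: Message) -> Optional[Message]:
    # Drops messages that do not meet a condition (filtering).
    return msg if msg.get("amount", 0) >= 100 else None


def transform(msg: Message) -> Message:
    # Changes the structure of the message (transformation).
    return {"order": msg}


def run_sequence(sequence: List[Mediator], msg: Message) -> Optional[Message]:
    # Each mediator's output is the next mediator's input (the "pipe").
    for mediator in sequence:
        result = mediator(msg)
        if result is None:
            return None  # a filter stopped the flow
        msg = result
    return msg


sequence = [enrich, filter_small_orders, transform]
print(run_sequence(sequence, {"amount": 250}))
# {'order': {'amount': 250, 'currency': 'USD'}}
print(run_sequence(sequence, {"amount": 5}))
# None
```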

Message Stores and Processors

A Message Store is used by a mediation sequence to temporarily store messages before they are delivered to their destination. This approach is useful in the following scenarios:

1. Serving traffic to back-end services that can only accept messages at a given rate, while incoming traffic arrives at varying rates. This use case is called request rate matching.
2. If the back-end service is not available at a particular moment, the message can be kept safely inside the Message Store until the back-end service becomes available. This use case is called guaranteed delivery.

The task of the Message Processor is to pick up the messages stored in the Message Store and deliver them to the destination.
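The interplay of store and processor can be sketched in Python as follows. The store is a FIFO queue. On each polling tick, the processor delivers at most `batch_size` messages, which gives request rate matching. It removes a message only after delivery succeeds, which gives guaranteed delivery. `MessageStore`, `MessageProcessor`, `tick()`, and the stop-and-retry-next-tick policy are assumptions for illustration, not WSO2 APIs or their actual retry behavior.

```python
# Conceptual sketch of a message store plus a message processor.
# Class names and the retry policy are assumptions, not WSO2 APIs.
from collections import deque
from typing import Callable, Deque, List


class MessageStore:
    """Holds messages temporarily until they can be delivered."""

    def __init__(self) -> None:
        self._queue: Deque[str] = deque()

    def store(self, msg: str) -> None:
        self._queue.append(msg)

    def peek(self) -> str:
        return self._queue[0]

    def remove(self) -> None:
        self._queue.popleft()

    def __len__(self) -> int:
        return len(self._queue)


class MessageProcessor:
    """Picks up stored messages and delivers them to the back end."""

    def __init__(self, store: MessageStore, deliver: Callable[[str], bool],
                 batch_size: int) -> None:
        self.store = store
        self.deliver = deliver  # returns False if the back end is unavailable
        self.batch_size = batch_size  # max deliveries per tick (rate matching)

    def tick(self) -> int:
        """Runs once per polling interval; returns the number delivered."""
        delivered = 0
        while self.store and delivered < self.batch_size:
            msg = self.store.peek()
            if not self.deliver(msg):
                break  # keep the message stored and retry on a later tick
            self.store.remove()  # remove only after successful delivery
            delivered += 1
        return delivered


backend_up = False
received: List[str] = []


def deliver(msg: str) -> bool:
    if backend_up:
        received.append(msg)
    return backend_up


store = MessageStore()
for i in range(5):
    store.store(f"msg-{i}")
processor = MessageProcessor(store, deliver, batch_size=2)
print(processor.tick())  # 0: back end is down, nothing is lost
backend_up = True
print(processor.tick())  # 2: at most two messages per tick
print(processor.tick(), processor.tick())  # 2 1
```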

Templates

Satisfying all the mediation requirements of your system can require a large number of configuration files in the form of sequences, endpoints, and transformations. To keep your configurations manageable, it is important to avoid scattering configuration files across different locations and to avoid duplicating configurations. Templates help minimize this redundancy by creating prototypes that users can use and reuse when needed. WSO2 Micro Integrator can template sequences and endpoints.
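As a rough analogy, an endpoint template behaves like a parameterized factory. The shared settings are written once, and each concrete endpoint supplies only what differs. In this Python sketch, `Endpoint`, `endpoint_template`, the host names, and the 30-second timeout are all hypothetical. It does not reflect the Micro Integrator's template syntax.

```python
# Conceptual sketch: one endpoint "template" reused with different parameters.
# Endpoint, endpoint_template, hosts, and the timeout value are hypothetical.
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    name: str
    uri: str
    timeout_ms: int


def endpoint_template(name: str, host: str, port: int, path: str) -> Endpoint:
    # Shared settings (here, a hypothetical 30 s timeout) live in one place
    # instead of being copied into every endpoint definition.
    return Endpoint(name=name, uri=f"https://{host}:{port}{path}", timeout_ms=30_000)


orders_ep = endpoint_template("OrdersEP", "orders.example.com", 8443, "/orders")
stock_ep = endpoint_template("StockEP", "stock.example.com", 8443, "/stock")
print(orders_ep.uri)  # https://orders.example.com:8443/orders
```

Changing the shared timeout now means editing one function rather than every endpoint definition. That is the redundancy templates are meant to remove.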

Message exit points

Endpoints

A message exit point, or endpoint, defines an external destination for a message. An endpoint can represent a URL, a mailbox, a JMS queue, a TCP socket, and so on, along with the settings needed for the connection.

Connectors

Connectors allow your mediation flows to connect to and interact with external services such as Twitter and Salesforce. Typically, a connector wraps the API of an external service. A connector is also a collection of mediation templates that define specific operations to be performed on the service, and each operation performs a different action in that service. For example, the Twitter connector has operations for creating a tweet, getting a user's followers, and more.

To download a required connector, go to the WSO2 Connector Store.

Data Services

The data in your organization can be a complex pool of information stored in heterogeneous systems. Data services are created to decouple the data from its infrastructure. In other words, when you create a data service in WSO2 Micro Integrator, the data stored in a storage system (such as an RDBMS) can be exposed in the form of a service. This allows users (which may be any application or system) to access the data without interacting with the original source of the data. Data services thus provide a convenient interface for interacting with the database layer in your organization.

A data service in WSO2 Micro Integrator is a SOAP-based web service by default. However, you also have the option of creating REST resources, which allows applications and systems consuming the data service to have both SOAP-based and RESTful access to your data.
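The decoupling idea can be illustrated with a small Python sketch. Consumers call a named operation and receive records, and they never see the SQL or the database connection. An in-memory SQLite table stands in for the RDBMS. `EmployeeDataService`, the `employees` table, and the operation name are hypothetical. A real data service is defined in the Micro Integrator, not written in Python.

```python
# Conceptual sketch of the data-service idea: callers invoke a named
# operation and never see the SQL or the database connection.
# The table, query, and operation names are hypothetical.
import json
import sqlite3
from typing import Any, Dict, List


class EmployeeDataService:
    def __init__(self, conn: sqlite3.Connection) -> None:
        self._conn = conn  # the storage system stays hidden behind the service

    def get_employees_by_dept(self, dept: str) -> List[Dict[str, Any]]:
        rows = self._conn.execute(
            "SELECT id, name FROM employees WHERE dept = ? ORDER BY id", (dept,)
        ).fetchall()
        return [{"id": r[0], "name": r[1]} for r in rows]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER, name TEXT, dept TEXT)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [(1, "Ann", "sales"), (2, "Raj", "it"), (3, "Li", "sales")],
)
service = EmployeeDataService(conn)
# A SOAP operation or REST resource would wrap this call and serialize the result.
print(json.dumps(service.get_employees_by_dept("sales")))
# [{"id": 1, "name": "Ann"}, {"id": 3, "name": "Li"}]
```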

Other concepts

Scheduled Tasks

Executing an integration process at a specified time is a common requirement in enterprise integration. For example, an organization may need to run an integration process that synchronizes two systems at the end of every day.

In Micro Integrator, a message mediation process can be automated to run periodically by using a scheduled task. You can schedule a task to run at a time interval of 't' for 'n' times, or to run once when the Micro Integrator starts.

Furthermore, you can use cron expressions for more advanced scheduling.
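The "interval of 't' for 'n' times" trigger can be pictured as a list of run times. This Python sketch computes them. It assumes the first run happens at the start time, which may differ from the Micro Integrator's actual behavior. `interval_schedule` is a hypothetical helper, not a WSO2 task setting, and cron expressions are not modeled.

```python
# Conceptual sketch only: interval_schedule() is a hypothetical helper, not a
# WSO2 task API. It assumes the first run happens at the start time.
from datetime import datetime, timedelta
from typing import List


def interval_schedule(start: datetime, interval: timedelta, count: int) -> List[datetime]:
    """Run times for a task that fires every `interval`, `count` times in total.

    A run-once task corresponds to count=1 with start = server start-up.
    Cron-based schedules are not modelled here.
    """
    return [start + i * interval for i in range(count)]


startup = datetime(2024, 1, 1, 0, 0)
for run_at in interval_schedule(startup, timedelta(minutes=15), 3):
    print(run_at.isoformat())
# 2024-01-01T00:00:00
# 2024-01-01T00:15:00
# 2024-01-01T00:30:00
```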

Transports

A transport protocol is responsible for carrying messages that are in a specific format. WSO2 Micro Integrator supports all the widely used transports, including HTTP/S, JMS, and VFS, as well as domain-specific transports such as FIX. Each transport provides a receiver implementation for receiving messages and a sender implementation for sending messages.

Registry

WSO2 Micro Integrator uses a registry to store various configurations and resources such as endpoints. A registry is simply a content store and a metadata repository. Various resources such as XSLT scripts, WSDLs, and configuration files can be stored in a registry and referred to by a key, which is a path similar to a UNIX file path. By default, WSO2 Micro Integrator uses a file-based registry. When you develop your integration artifacts, you can also define and use a local registry.
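To illustrate "content store plus metadata, addressed by a path-like key", here is a small Python sketch. `Registry`, `Resource`, `list_folder`, and the sample keys are hypothetical. Real registry keys and storage in the Micro Integrator follow their own conventions.

```python
# Conceptual sketch of a registry: a content store plus metadata, addressed by
# UNIX-style path keys. Registry, Resource, and the keys used are hypothetical.
from dataclasses import dataclass, field
from pathlib import PurePosixPath
from typing import Dict, List


@dataclass
class Resource:
    content: str
    metadata: Dict[str, str] = field(default_factory=dict)


class Registry:
    def __init__(self) -> None:
        self._resources: Dict[str, Resource] = {}

    @staticmethod
    def _normalise(key: str) -> str:
        # "transforms//order.xslt" -> "/transforms/order.xslt"
        return str(PurePosixPath("/", key))

    def put(self, key: str, resource: Resource) -> None:
        self._resources[self._normalise(key)] = resource

    def get(self, key: str) -> Resource:
        return self._resources[self._normalise(key)]

    def list_folder(self, folder: str) -> List[str]:
        # All keys stored under a folder-like prefix.
        prefix = self._normalise(folder).rstrip("/") + "/"
        return sorted(k for k in self._resources if k.startswith(prefix))


reg = Registry()
reg.put("transforms/order.xslt", Resource("<xsl:stylesheet/>", {"type": "xslt"}))
reg.put("wsdl/orders.wsdl", Resource("<definitions/>", {"type": "wsdl"}))
print(reg.list_folder("/transforms"))                     # ['/transforms/order.xslt']
print(reg.get("transforms/order.xslt").metadata["type"])  # xslt
```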

Message Builders and Formatters

When a message comes into WSO2 Micro Integrator, the receiving transport selects a message builder based on the message's content type. It uses that builder to process the message's raw payload and convert it to common XML, which the mediation engine of WSO2 Micro Integrator can then read and understand. WSO2 Micro Integrator includes message builders for text-based and binary content.

Conversely, before a transport sends a message out from WSO2 Micro Integrator, a message formatter is used to build the outgoing stream from the message back into its original format. As with message builders, the message formatter is selected based on the message's content type. You can implement new message builders and formatters for custom requirements.
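The selection-by-content-type mechanism can be sketched in Python. A builder maps raw bytes to an XML element, a formatter maps the element back to bytes, and both are looked up by media type. The functions, the `payload` root element, and the flat JSON-to-XML mapping are simplified assumptions, not WSO2's actual builder and formatter classes.

```python
# Conceptual sketch: builders turn raw bytes into an XML element and formatters
# turn it back into bytes, both chosen by content type. These are simplified,
# hypothetical stand-ins, not WSO2 classes; the naive JSON mapping only handles
# flat objects and turns values into strings.
import json
import xml.etree.ElementTree as ET
from typing import Callable, Dict


def build_json(raw: bytes) -> ET.Element:
    root = ET.Element("payload")
    for key, value in json.loads(raw).items():
        ET.SubElement(root, key).text = str(value)
    return root


def build_xml(raw: bytes) -> ET.Element:
    return ET.fromstring(raw)


def format_json(root: ET.Element) -> bytes:
    return json.dumps({child.tag: child.text for child in root}).encode()


def format_xml(root: ET.Element) -> bytes:
    return ET.tostring(root)


BUILDERS: Dict[str, Callable[[bytes], ET.Element]] = {
    "application/json": build_json,
    "application/xml": build_xml,
}
FORMATTERS: Dict[str, Callable[[ET.Element], bytes]] = {
    "application/json": format_json,
    "application/xml": format_xml,
}


def media_type(content_type: str) -> str:
    # "application/json; charset=UTF-8" -> "application/json"
    return content_type.split(";")[0].strip().lower()


# Receiving side: select the builder from the incoming content type.
builder = BUILDERS[media_type("application/json; charset=UTF-8")]
message = builder(b'{"id": 7, "status": "new"}')
print(ET.tostring(message).decode())
# <payload><id>7</id><status>new</status></payload>

# Sending side: select the formatter for the outgoing content type.
print(FORMATTERS["application/json"](message).decode())
# {"id": "7", "status": "new"}
```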

Streaming Integration

| Concept | Description |
|---|---|
| Streaming Data | A continuous flow of data generated by one or more sources. A data stream is often made up of discrete data bundles that are transmitted one after the other. These data bundles are often referred to as events or messages. |
| Stream Processing | A paradigm for processing streaming data. Stream processing lets users process continuous streams of data on the fly and derive results faster, in near real time. This contrasts with conventional processing methods that require data to be stored prior to processing. |
| Streaming Data Integration | The process that makes streaming data available to downstream destinations. Each consumer may expect data in different formats, protocols, or mediums (DB, file, network, etc.). Streaming Data Integration addresses these requirements by converting data into different formats and publishing it over different protocols through different mediums. The data is often processed using Stream Processing and other techniques to add value before it is integrated with its consumers. |
| Streaming Integration | In contrast to Streaming Data Integration, which only focuses on making streaming data available downstream, Streaming Integration involves integrating streaming data as well as triggering actions based on data streams. An action can be a single request to a service or a complex enterprise integration flow. |
| Data Transformation | Converting data from one format to another (e.g., JSON to XML) or altering the original structure of the data. |
| Data Cleansing | Filtering out corrupted, inaccurate, or irrelevant data from a data stream based on one or more conditions. Data cleansing also involves modifying or replacing content to hide or remove unwanted parts of a message (e.g., obscuring). |
| Data Correlation | Combining different data items based on the relationships between them. In the context of streaming data, it is often necessary to correlate data across multiple streams received from different sources. The relationship can be expressed as a boolean condition, a sequence, or a pattern of occurrences of data items. |
| Data Enrichment | The process of adding value to raw data by supplementing it with additional information that makes it more consumable for users. |
| Data Summarization | Aggregating data to produce a summarized representation of raw data. In the context of Stream Processing, such aggregations can be computed in real time based on temporal criteria, using moving time windows. |
| Real-time ETL | Performing data extraction, transformation, and loading in real time. In contrast to traditional ETL, which uses batch processing, real-time ETL extracts data as soon as it is available, transforms it on the fly, and integrates it. |
| Change Data Capture | A technique or process that captures changes in data in real time. This enables real-time ETL with databases because it allows users to receive real-time notifications when data in database tables changes. |
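Data summarization over a moving time window can be sketched in a few lines of Python. For each incoming event, the function reports the average of all values whose timestamps fall in the last `window` seconds, and older events are expired incrementally. `sliding_average`, the `(timestamp, value)` event shape, and the window size are illustrative assumptions, not the Micro Integrator's streaming API.

```python
# Conceptual sketch of data summarization over a moving time window: for each
# event, the average of values seen in the window (ts - window, ts].
# The function name, event shape, and window size are assumptions.
from collections import deque
from typing import Deque, Iterable, List, Tuple


def sliding_average(events: Iterable[Tuple[float, float]], window: float) -> List[float]:
    """events: (timestamp_seconds, value) pairs in timestamp order; window > 0."""
    buf: Deque[Tuple[float, float]] = deque()
    total = 0.0
    averages: List[float] = []
    for ts, value in events:
        buf.append((ts, value))
        total += value
        # Expire events that have fallen out of the window.
        while buf[0][0] <= ts - window:
            total -= buf.popleft()[1]
        averages.append(total / len(buf))
    return averages


readings = [(0, 10.0), (1, 20.0), (2, 30.0), (4, 40.0)]
print(sliding_average(readings, window=3))  # [10.0, 15.0, 20.0, 35.0]
```

Keeping a running total and expiring old events from the front of a deque means each event is added and removed once. This avoids re-scanning the whole window for every new event.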