Publishing Aggregated Events to the Amazon AWS S3 Bucket

Purpose:

This application demonstrates how to publish aggregated events to an Amazon S3 bucket via the siddhi-io-s3 sink extension. In this sample, the events received by the StockQuoteStream stream are aggregated by the StockQuoteWindow window, which collects them in batches of three (lengthBatch(3)). The batches are then published to the Amazon S3 bucket specified via the bucket.name parameter.
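The batching performed by lengthBatch(3) can be illustrated outside Siddhi. The following Python sketch is a simplified model, not the Siddhi implementation, and it ignores Siddhi's distinction between current and expired events. It groups incoming events into batches of three and emits a batch only once it is full. The function name `length_batch` is hypothetical.

```python
from typing import Iterable, Iterator, List, Tuple

# One StockQuoteStream event: (symbol, price, quantity)
Event = Tuple[str, float, int]


def length_batch(events: Iterable[Event], size: int = 3) -> Iterator[List[Event]]:
    """Group events into batches of `size`, emitting a batch only when it is full.

    Events that do not yet fill a batch stay buffered and are not emitted,
    similar to how a length-batch window waits for its Nth event.
    """
    if size < 1:
        raise ValueError("size must be at least 1")
    buffer: List[Event] = []
    for event in events:
        buffer.append(event)
        if len(buffer) == size:
            yield buffer
            buffer = []  # start a fresh batch; the emitted list is not reused


if __name__ == "__main__":
    quotes = [("IBM", 100.0, 10), ("WSO2", 55.5, 20), ("Dell", 80.0, 5),
              ("IBM", 101.0, 7), ("WSO2", 56.0, 3)]
    for batch in length_batch(quotes):
        print(batch)  # only the first three quotes form a complete batch
```

In this model, five input events produce one batch of three, and the remaining two wait for a third event.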

Before you begin:

- Create an AWS account and set up your credentials by following the instructions in the AWS Developer Guide.

- Save the sample Siddhi application in Streaming Integrator Tooling.
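The sample app uses com.amazonaws.auth.profile.ProfileCredentialsProvider. This provider reads credentials from the AWS shared credentials file, which by default is ~/.aws/credentials, using the `default` profile. As an optional pre-check, the following Python sketch verifies that a profile in that file defines both an access key ID and a secret access key. The helper `profile_has_credentials` is hypothetical and is not part of Streaming Integrator.

```python
import configparser
from pathlib import Path

# Keys that a usable profile in the shared credentials file must define
REQUIRED_KEYS = ("aws_access_key_id", "aws_secret_access_key")


def profile_has_credentials(credentials_file: Path, profile: str = "default") -> bool:
    """Return True if `profile` in the given credentials file defines both keys."""
    parser = configparser.ConfigParser()
    # read() silently skips a missing file, leaving the parser with no sections
    parser.read(credentials_file)
    if not parser.has_section(profile):
        return False
    return all(parser.get(profile, key, fallback="").strip() for key in REQUIRED_KEYS)


if __name__ == "__main__":
    path = Path.home() / ".aws" / "credentials"
    print("default profile configured:", profile_has_credentials(path))
```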

Executing the Sample:

To execute the sample, follow the procedure below:

- In Streaming Integrator Tooling, click Open and then click AmazonS3SinkSample.siddhi in the workspace directory. Then update it as follows:

    - Enter the fully qualified class name of the credential provider as the value of the credential.provider parameter. If the class is not specified, the default credential provider chain is used.

    - For the bucket.name parameter, enter the name of your S3 bucket as the value (AWSBUCKET in this example).

    - Save the Siddhi application.

- Start the Siddhi application by clicking the Start button or by clicking Run => Run.

    If the Siddhi application starts successfully, the following message appears in the console.

    AmazonS3SinkSample.siddhi - Started Successfully!.
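When credential.provider is omitted, the AWS SDK falls back to its default credential provider chain. The chain tries several sources in order and uses the first one that returns credentials. Examples of these sources are environment variables, Java system properties, the shared credentials file, and container or instance credentials. The Python sketch below models only this "first provider wins" behavior, using two hypothetical providers. It is not the SDK's implementation, and the names `env_provider`, `static_provider`, and `resolve` are illustrative.

```python
import os
from typing import Callable, Dict, List, Mapping, Optional

Credentials = Dict[str, str]
Provider = Callable[[], Optional[Credentials]]


def env_provider(env: Mapping[str, str]) -> Provider:
    """Hypothetical provider that reads the standard AWS environment variables."""
    def provide() -> Optional[Credentials]:
        key = env.get("AWS_ACCESS_KEY_ID")
        secret = env.get("AWS_SECRET_ACCESS_KEY")
        if key and secret:
            return {"access_key": key, "secret_key": secret, "source": "environment"}
        return None  # not configured here; let the next provider try
    return provide


def static_provider(creds: Optional[Credentials]) -> Provider:
    """Hypothetical stand-in for a later link, such as the profile file."""
    return lambda: creds


def resolve(chain: List[Provider]) -> Credentials:
    """Return the credentials from the first provider in the chain that supplies them."""
    for provider in chain:
        creds = provider()
        if creds is not None:
            return creds
    raise RuntimeError("No provider in the chain supplied AWS credentials")


if __name__ == "__main__":
    chain = [env_provider(os.environ),
             static_provider({"access_key": "AKIAEXAMPLE", "secret_key": "example",
                              "source": "profile"})]
    print("credentials resolved from:", resolve(chain)["source"])
```

Passing an explicit class name in credential.provider, as the sample does with ProfileCredentialsProvider, skips this search and uses that single source.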

Testing the Sample

To test the sample Siddhi application, simulate random events for it via the Streaming Integrator Tooling as follows:

-

To open the Event Simulator, click the Event Simulator icon.

-

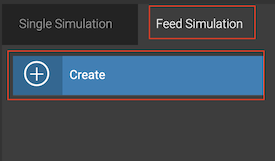

In the Event Simulator panel, click Feed Simulation -> Create.

-

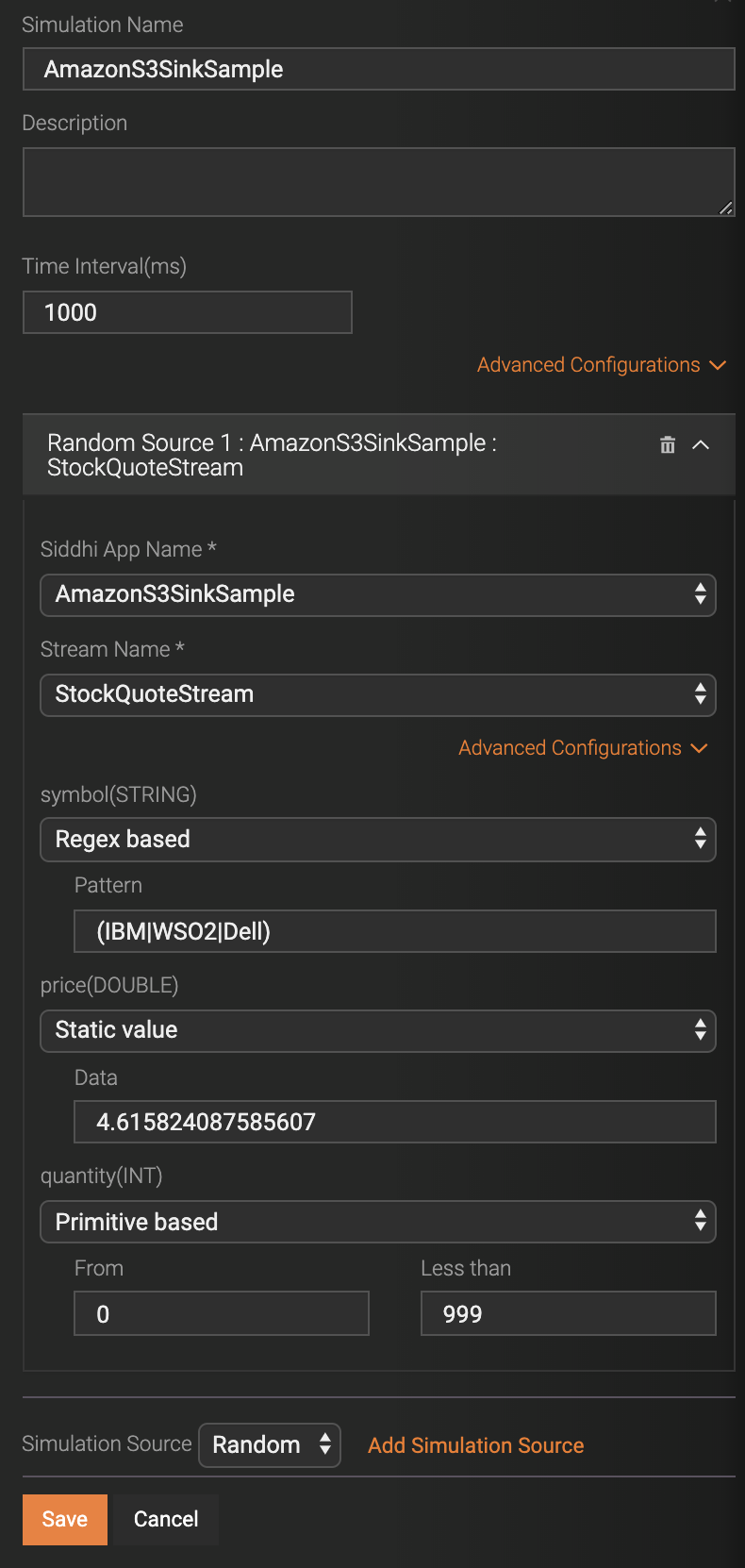

In the new panel that opens, enter information as follows.

-

In the Simulation Name field, enter

AmazonS3SinkSampleas the name for the simulation. -

Select Random as the simulation source and then click Add Simulation Source.

-

In the Siddhi App Name field, select AmazonS3SinkSample.

-

In the Stream Name field, select StockQuoteStream.

-

    - In the symbol(STRING) field, select Regex based. Then, in the Pattern field that appears, enter (IBM|WSO2|Dell) as the pattern.

        Tip

        When you use the (IBM|WSO2|Dell) pattern, only IBM, WSO2, and Dell are selected as values for the symbol attribute of the StockQuoteStream stream. Limiting the symbol attribute to a few values makes the output easier to verify.

In the price(DOUBLE) field, select Static value.

-

In the quantity(INT) field, select Primitive based.

-

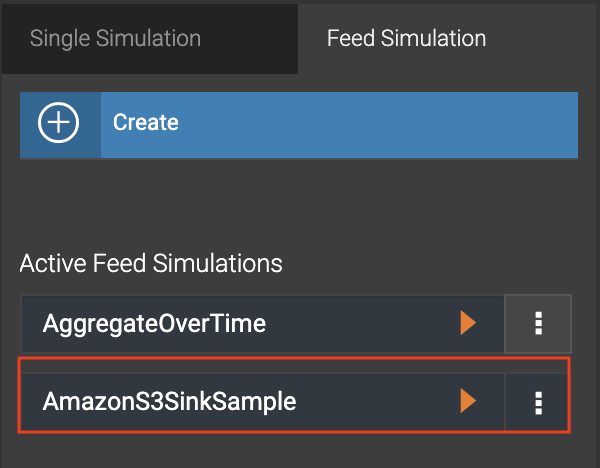

Save the simulator configuration by clicking Save. Th.e simulation is added to the list of saved feed simulations as shown below.

-

-

To simulate random events, click the Start button next to the AmazonS3SinkSample simulator.
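To reason about what the feed simulation sends, the following Python sketch mimics the configuration above. The symbol is drawn from the three alternatives of (IBM|WSO2|Dell), the price is a fixed value, and the quantity is a random integer. The quantity range (1 to 1000) and the static price are assumptions, because the simulator's actual values are configured in the tool. The function `simulate_events` is hypothetical.

```python
import random
import re
from typing import List, Tuple

# The pattern entered in the simulator's Pattern field
SYMBOL_PATTERN = re.compile(r"(IBM|WSO2|Dell)")
SYMBOLS = ["IBM", "WSO2", "Dell"]


def simulate_events(count: int, static_price: float,
                    seed: int = 0) -> List[Tuple[str, float, int]]:
    """Generate (symbol, price, quantity) tuples shaped like StockQuoteStream events."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    events = []
    for _ in range(count):
        symbol = rng.choice(SYMBOLS)     # one of the pattern's three alternatives
        quantity = rng.randint(1, 1000)  # assumed range for the primitive-based int
        events.append((symbol, static_price, quantity))
    return events


if __name__ == "__main__":
    for event in simulate_events(6, static_price=100.0, seed=42):
        print(event)
```

Because the window uses lengthBatch(3), nine simulated events like these would be emitted as three batches of three.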

Viewing Results

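Once the events are sent, check the S3 bucket. Because StockQuoteWindow is a lengthBatch(3) window, the events are published in batches, and each object created in the bucket contains three events.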

The complete sample Siddhi application is as follows:

```
@App:name("AmazonS3SinkSample")
@App:description("Publish events to Amazon AWS S3")

-- Length-batch window: collects 3 events, then emits them together
define window StockQuoteWindow(symbol string, price double, quantity int) lengthBatch(3) output all events;

-- Input stream fed by the event simulator
define stream StockQuoteStream(symbol string, price double, quantity int);

-- S3 sink: writes the events as JSON to the bucket named in bucket.name
@sink(type='s3', bucket.name='<BUCKET_NAME>', object.path='stocks',
    credential.provider='com.amazonaws.auth.profile.ProfileCredentialsProvider', node.id='zeus',
    @map(type='json', enclosing.element='$.stocks',
        @payload("""{"symbol": "{{symbol}}", "price": "{{price}}", "quantity": "{{quantity}}"}""")))
define stream StorageOutputStream (symbol string, price double, quantity int);

-- Route incoming quotes into the window
from StockQuoteStream
insert into StockQuoteWindow;

-- Forward the events emitted by the window to the sink stream
from StockQuoteWindow
select *
insert into StorageOutputStream;
```
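The @payload annotation above is a template in which each {{attribute}} placeholder is replaced with the event's value. The following Python sketch shows that substitution for a single event, using the same template string. It does not model the enclosing.element wrapping or how Siddhi formats numbers, and the function `render_payload` is hypothetical. Because the template wraps {{price}} and {{quantity}} in double quotes, those values come out as JSON strings rather than JSON numbers.

```python
import json
from typing import Dict

# Same template as the @payload annotation in the sample app
PAYLOAD_TEMPLATE = '{"symbol": "{{symbol}}", "price": "{{price}}", "quantity": "{{quantity}}"}'


def render_payload(event: Dict[str, object]) -> str:
    """Replace each {{name}} placeholder in the template with the event's value."""
    out = PAYLOAD_TEMPLATE
    for name, value in event.items():
        out = out.replace("{{" + name + "}}", str(value))
    return out


if __name__ == "__main__":
    rendered = render_payload({"symbol": "IBM", "price": 55.5, "quantity": 10})
    print(rendered)
    print(json.loads(rendered))  # price and quantity parse as strings
```

To publish numeric JSON values instead, the quotes around {{price}} and {{quantity}} would have to be removed from the template.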